I don't think you TRULY grok the problem, so I'll try and lay it out just as simply as I possibly can. When AI finally "cracks the code" -- which it WILL do, whether it takes 25 years or 50 years to actually do it -- it will be the death of every human on the planet. Period. Because we are not truly prepared, beforehand, with world-changing answers. Brain-Computer Interfaces (BCI) getting "brainy" in 10 years, and then 20, and then 30 -- leaving human brains behind because they are too biological; or Vicarious and DeepMind and a handful of others with deep pockets and full AGI on the agenda, including Chinese and Russian counterparts -- they will not be very interesting for 10 years or 15, of course that's too soon, but 25 or 30, arriving with a thunderous blaze of glory -- absolutely. I think you kind of get it at a very superficial, light level, which unfortunately allows you to be breezily and wittingly ironic, but I don't think that you really understand AT ALL that the whole human race will no longer exist. It's not necessary -- because a better race will be here, vastly better than anything before it, by a magnitude of 100x. It will blow the human race out of the water completely; it will not even be close. A new and better race of artificial intelligence will take over from here, and the human race is OVER.

And yes, I'm aware that I can no longer program in Python or TensorFlow very well now; I don't need to at all to understand the overall picture, in fact it gets in the way. It's really amazingly clear to me, from a big-picture level, that AI will be completely superior to human beings very soon (although exactly when is debatable for sure; I have one view, which is shared by many: Steve Wozniak, Stephen Hawking, Bill Gates, Bill Joy, Jürgen Schmidhuber and many other very smart individuals, who share exactly my concern), although I'm sure a lot of people are wondering what the hell I'm smoking. I get that -- it comes along with the territory, to be out front. But I'm telling you it will be here without any question, and if you value your very existence (without being ironic -- please), you will start thinking about it much more seriously. It's the greatest existential threat ever, period. It's up to you, each individual one of you, to think how it could be altered right now, before it's here, because when it ACTUALLY is here, it will be much too late.

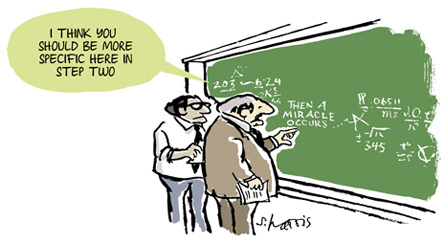

Never mind the tone, let's focus on specifics: Why ten years? Why twenty? Why thirty? Why a hundred times over? Why not a thousand? Why not ten? You keep pointing to the end. We keep pointing at the middle. You keep saying "but I am the mighty and powerful Wizard of Oz, look at the end." Read my lips, man - I have a little background in implantable devices. It taught me, above all else, that no matter how tightly your tech cleaves to Moore's Law, biology is biology and if you want your cyborg to retain an immune system you're facing a whole 'nuther regime of progress. And I recognize that "brain-computer interfaces (BCI)" has fuckall to do with AI... but I'm not sure you do. And no matter how many times we ask about the fuzzy shit in the middle, your answer is So just for the sake of argument, can you give this shit a try as if we're actually listening? As if we're actually trying to have a conversation? As if this is something more than you standing on a corner with a megaphone and a sandwich board? Please?

Brain-Computer Interfaces (BCI) getting "brainy" in 10 years, and then 20, and then 30 --

It's not necessary -- because a better race will be here, vastly better than anything before it, by a magnitude of 100x.

Yes. In particular: "... if you value your very existence (without being ironic -- please), you will start thinking about it much more seriously. It's the greatest existential threat ever, period. It's up to you, each individual one of you, to think how it could be altered right now, before it's here... ".

If that's so, you really need to re-think your approach. Condescending to your audience is not effective, particularly when you've shown no reason for us to believe you know more about the subject than we do.

I honestly do not know what you're talking about. We maybe have a difference of view, or maybe we agree. Either way is fine with me, but if there is a difference in your personal case, please explain it, and I (and other people) will reason it out together. It's not "condescending" at all -- in fact the opposite.

As Isherwood said, the whole tone and way of communicating in your post is saying that your reader is dumber than you, doesn't understand it as well as you do, and needs enlightenment. You're assuming that you're smarter than literally every person who reads your post, and that you know more about AI risk than them as well. Meanwhile, you give an incredibly shallow description of the issue, but give no insight into what the current thinking on the problem actually is, or what any actual solutions might be. A good discussion wouldn't excuse the attitude of your post, but its absence certainly makes it worse.

This is condescension - you don't know what I know, but you're talking to me like I'm stupid, you're smart, and you need to make your opinions as dumbed down as possible just for me to get it. That was your opening sentence and that's the tone that the rest of the article will be read in - not a great first impression and it certainly doesn't make me want to read on. As for the rest of your article, you just keep saying the same thing over and over again but using different words - AI is great and will kill us all - but you never actually say why. I mean, we're better than beavers in a lot of ways, but we still have beavers. When machines become super intelligent, why will they kill all humans? Are we a disease on the earth and the machines are the cure? Will they see us as a nuisance and need to get us out of the way? Will they be made for war and just get out of control and kill everything? I'm not hearing anything about the threat other than the fact that it's from robots.

I don't think you TRULY grok the problem, so I'll try and lay it out just as simply as I possibly can.

Ok, let's say I'm wrong and it IS condescending in some way. I really don't think so -- I think it is exactly the way that I would want to be talked to myself. It's just saying what we really mean: it's uplifting actually. But nonetheless, perhaps I'm wrong anyway -- disregard that for now, because I really just want to talk with you as equals. We're all friends here -- alright? And I do have some experience -- I have been CEO or CTO for a long time, including for several years as CEO consulting on AI, robotics, and nanotechnology topics, and previously CEO for 8 happy years at a personalized voice recognition company, where I had customers including Costco, Lowe's, Crate & Barrel, Buy.com, and several others, and where we were bought for a lot more than the $3 million that I personally raised myself. So let's just say that I am sorry if I got you off on the wrong foot -- I'm truly sorry -- but aside from that... Nonetheless, clearly Elon Musk and Stuart Russell (professor of AI at Cal) agree with me. Bill Joy and Bill Gates and Stephen Hawking and Steve Wozniak agree with me too, some of the greatest minds that exist today -- and lots of them were superb programmers in their day, absolutely world-class. And many others -- this is not a new argument. Unless we get together and change things drastically, which is very unlikely to happen unless WE personally do it, we're set to go back to the literal stone age pretty quickly, within 20-30 years, because of the military AI arms race, which is unlike anything you have seen before -- a programmer's wrist, 20 million individuals, rather than top-secret nuclear arms, which a truly tiny percentage of people have. Do you think I am wrong?

First, saying what you really mean and being condescending are not mutually exclusive. Second, it's cool you were CEO and sold your company but I don't see how that matters - if your argument is that all of humanity will be dead in 20 years, I'm going to need you to make a case for that. I have yet to hear anything of the sort. As for your question - do I think we're going to go back to the stone age in the next 20-30 years? No, I don't. I just haven't heard a compelling explanation of how it's going to happen. I mean, you hint towards a military AI arms race - but I don't understand how that's different than any other arms race. We had this same discussion in the 50's about nukes but we're still here. And yes, that's because of regulation, but you have yet to mention that anywhere in this thread either, so I'm not going to just assume that's what you're talking about - once again, I don't know you and I'm not going to make a case for you. But also, when you say AI and robots are better than us - what do you mean? Better at what? At killing us? Certainly, but let's say they do that - then what would happen? What would they be doing on the earth that's better than us? Planting crops? Raising cattle? Having war? Being the stewards of the earth in preparation for the second coming? Or is this a Skynet scenario where we only program "kill" and nothing else? Or, if we program an AI that's smart enough to kill everything on the earth except for itself, why is it not smart enough to question its motives? To wonder basic questions like "what's the point of all this?" You haven't made a point in your arguments as far as I can see - you haven't even made a suggestion of what WE can do (just that we have to do something). The only thing you've done is fling open a door and demand that all of us cower in fear.
I know you don't think that's what you're doing and maybe in your head you have a cohesive and solid argument but it's not coming out in your words, and that's how it ties back to your condescension - what you think you're saying is not what we're hearing. You can hem and haw about how it's not your intent, but the number of people who have pointed out the same issue should be an indicator that it might actually be a real issue.

(The note on reddit.com, just now): I am -- because of military AI and its utterly devastating arms race, which will have to conclude with our destruction, although otherwise I would just say that humans and robots would live together in harmony. But I should have made that clearer -- my mistake. No, to answer your questions, AI is wiser than us (generally, not in particular cases, unfortunately). It's really ANI for the military case, not AGI, but that does not mean it can't kill us very effectively. It can and it will. Because all it takes is a programmer's wrist (I should have made that clearer too), which is very, very plentiful, including among our direst enemies, like ISIS, al-Qaeda, and future terrorists of all stripes.

But some soldier (well, two soldiers) in Nebraska or Siberia could launch a nuke and kill us all. Why is AI more dangerous? And if it's an arms race, then there won't be just one side with access to the weapons and, like all war, attack will become a cost-benefit analysis in the face of retribution. Why won't there be counter AI to fight off attacks? And we're a million times smarter than ants, right? So why are there still ants? What are the machines going to see in us that means we should all die? I've asked that question three times now.

Well I have answered it a couple of times too :-) but let's try again with a fresh take. First off, that's precisely my point about a couple of soldiers in Utah or Nebraska firing off nuclear weapons. They are very specialized soldiers, with very special training, and it takes two of them to do it AT THE SAME TIME, with a host of protections to back it up. But one programmer from ISIS can do it immediately, and there are literally infinitely more programmers who can do it in a heartbeat than highly specialized, trained soldiers with specialized equipment to back them up -- that's the entire point! What really matters is that a programmer's shady arm, from anywhere in the world (there is no concept of place in this internet-world), can do the things that used to be the specialized soldier's prerogative. And the defense will be no use at all, because soon there will be not just one little programmer but a 10,000-strong army that can wipe the floor with any army for the first quarter hour, which is all it will take to take us back to the stone age. That's one of the many things I'm afraid of now -- it's not like it was when it was one big army against another -- that could be handled. This cannot.

...look. SAC is running 8" floppies. 'member WarGames? There's a reason they started it out with Michael Madsen failing to launch - they needed to wave hands and get rid of all the humans in the silos. But it's been 35 years and the humans are still there. The tech has not changed. The doctrine has not changed. The Soviets probably built something Strangelovian but it's about as smart as an automatic transmission. More than that, you're not talking about a malevolent AI, you're talking about an ISIS hacker. And okay - maybe your argument is ZOMG ISIS could hack our silos or something. But you can no more hack a missile silo than you can hack a garbage truck. It's not an access problem, it's a sophistication problem. Never mind the airgap. There simply isn't the instrumentation or automation that would allow a garbage truck to so much as malevolently drop a dumpster. And that's at the heart of most of your worries - oh shit an AI could take over the world and wipe us all out. But that pesky "take over the world" part is messy, man. It really is. There ain't a lot of the world that's automated and that's not likely to change - "automated" is very different from "mechanized" or "computer-controlled" or even "digital."

I mean, I heard this level of fear around nukes, and I'm glad they have regulations and I think regs should be on any weapon, but the situation you lay out just seems like sensationalism and I don't see how it's different than other weapons of mass destruction. And we haven't had total war like you're describing since WWII - and at the time it couldn't be handled, no one knew what to do about nukes, or chain guns, or tanks. War is a never-ending series of escalations that we're never ready for and our lack of preparedness pushes us to new escalations. I get that it's scary but it seems like fearmongering. But, if it's not, what's the suggestion to stop the world from ending?

Lol exactly. He can't "grock" the difference between speculation and fact. He doesn't seem to understand social norms or be able to understand basic social cues. He either doesn't read comments closely or has a reading disability or doesn't know the recent history of some very basic A.I. (self-driving cars). He often seems unable to distinguish between criticism of his manner and the material he is presenting (as if the material and his behavior are the same thing, taking criticisms of his behavior as disagreement with the material). He is a super strange person who seems to either have a screw loose or be lingering on the far edge of the functional spectrum. Hubski's A.I. Pope is super bizarre.

There are a handful of hubskiers I know of, myself included, who have done/are doing real AI work. I've been trying to ignore this dude's threads, but I'll point out that everyone else I know of who knows their shit hasn't bothered to comment in them either. Real-world AI is mostly boring, because things you can automate are always boring. PROFOUND/SCARY AI THING articles are always more science fiction than science.

You know it's quite hilarious -- my Facebook friends (and I only have a few hundred, my actual friends) include Oren Etzioni and Ben Goertzel and Roman Yampolskiy and Toby Walsh and Sebastian Thrun and Rob Enderle and many others -- it's you who have your heads on backwards. I humbly suggest you rethink your position -- it's really not going to work any more.

Elaborating now that I'm not half-asleep, AI algorithms are either automating logical inference or automating statistical inference or a mixture. You can call a spam filter AI if you want and no one will call you on it, but it's just boring old hypothesis testing if you do what it does with pencil and paper. Likewise self-driving cars aren't different in kind from missile guidance systems, they can just do more because we have better computers. AI is more who you studied with, how you want to think about the problems you're solving and, less benignly, how the marketing people want to talk about the problems you're solving than a distinct kind of technology.

I'm not entirely sure how to answer that, but if you wikipedia "Kalman filter" and follow along you're a couple of months from following Sebastian Thrun's book and the rest depends on which side of the "DARPA got us this far" and "Google has hookers and blow" you fall on.

I use grock correctly in a sentence (with quotation marks because it's silly) and you have to act like I'm some kind of big dummy, letting me know it's a "real" word used by Heinlein. For some reason you just have to assume that everyone is an ignoramus even when all the evidence is to the contrary. I know my Heinlein, I even remember how to drive the tractor.

That's precisely it -- it really is fundamentally different, and when you truly grok that about AI and AGI, you'll be the same as me; horrified beyond belief. It's funny, because I used to be exactly like you, skeptical of off-the-wall theories, and very confident we could do something, even if it would not be known right now. But about 8 years ago I realized that AI was totally different, a whole new threat, and this time it was terribly real, like nothing that has ever been seen before, ever. This was a fundamental advance, and it WILL be incredibly beneficial and useful without a doubt, but the downside is equally horrific -- literally the end of this world in our lifetime. I researched it for many years before I came to this conclusion -- it was not a half-assed remark or sassy conclusion by any means, and I really feel like you had better research it too, more closely. The programmer's lazy arm in ISIS, typing out instructions for the end of this world. It's 100000 times different and easier than a soldier's iron specification, and it will be the death of us. There IS NO SOLUTION -- so we have to think differently, and maybe -- maybe -- we can come up with something meaningful.

Hahaha... I understand; it takes a while to really understand so don't worry. And BTW Bostrom is talking about control, not actually really sticking to a paperclip maximizer... You know I actually majored in Philosophy and Math at Berkeley, although Math was always my specialty. I was quite good :-)

You sure do like moving the goalposts, don't you? So you grok this all so well but can't offer a solution? Not really making a good case for why we should listen to you on this issue. Earlier, you said: From reading your argument, you seem to have totally internalized its underlying assumptions, but skip over the part where you support them. Why will it be military AI? How will ISIS or some other such group have access to it? Why will their version be able to defeat one developed in the US? Why would an AGI do what they tell it to (or us for that matter)? Also, you still haven't answered my earlier comment asking why you think you've said anything many of us haven't already thought about.

when you truly grok that about AI and AGI, you'll be the same as me; horrified beyond belief.

There IS NO SOLUTION -- so we have to think differently, and maybe -- maybe -- we can come up with something meaningful.

It's really ANI for the military case, not AGI, but that does not mean it can't kill us very effectively. It can and it will.